CNN vs. RNN: Neural Networks for DSP

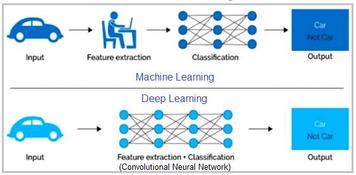

In deep learning for digital signal processing (DSP), Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) are two core architectures, each suited to different kinds of data and processing tasks.

Convolutional Neural Networks (CNNs)

CNNs are a specialized type of neural network designed primarily for grid-like data, such as images. Their convolutional layers slide small learned filters across the input, capturing local patterns such as edges and textures. Stacking these layers lets the network learn a spatial hierarchy of features, from simple patterns up to larger structures, which is crucial for tasks like:

- Image classification

- Object detection

- Image segmentation

CNNs also incorporate pooling layers to reduce dimensionality and computational complexity, as well as fully connected layers to perform classification based on the extracted features.
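The three stages described above (convolution, pooling, fully connected classification) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a trainable model: the 6x6 toy image, the hand-picked vertical-edge filter, the two output classes, and the helper names `conv2d_valid` and `max_pool2d` are all hypothetical. As in most deep learning frameworks, the "convolution" here is computed as a cross-correlation (the filter is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Elementwise product of the filter with the current image patch.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

# Toy 6x6 "image": dark left half, bright right half, i.e. one vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked vertical-edge filter (a trained CNN would learn its filters).
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = np.maximum(conv2d_valid(image, kernel), 0.0)  # convolution + ReLU
pooled = max_pool2d(feature_map)                            # 4x4 -> 2x2

# Fully connected layer with random, untrained weights, followed by softmax.
rng = np.random.default_rng(0)
weights = rng.normal(size=(2, pooled.size))  # 2 hypothetical classes
logits = weights @ pooled.ravel()
probs = np.exp(logits - logits.max())
probs /= probs.sum()
```

The feature map is nonzero only where the filter window straddles the edge, and pooling halves each spatial dimension before the flattened result reaches the dense layer. Because the weights are untrained, the class probabilities themselves carry no meaning; only the data flow is the point.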

Example: A CNN might be used in image recognition systems to identify objects within pictures by learning and detecting various features such as edges, shapes, and textures.

Recurrent Neural Networks (RNNs)

RNNs are designed to handle sequential data by maintaining a form of memory through recurrent connections. Unlike traditional feedforward neural networks, RNNs have connections that loop back on themselves, allowing information to persist across different time steps. This makes them well-suited for tasks involving temporal sequences, such as:

- Language modeling

- Time series prediction

- Speech recognition

RNNs process data in a step-by-step manner, maintaining hidden states that capture context and dependencies from previous time steps.
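A minimal NumPy sketch of this step-by-step processing follows, assuming the common vanilla RNN update h_t = tanh(W_x x_t + W_h h_{t-1} + b). The function name `rnn_forward`, the layer sizes, and the random untrained weights are illustrative assumptions, not part of any particular library.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b, h0=None):
    """Run a vanilla RNN over `inputs` (time_steps x input_dim); return all hidden states."""
    hidden_dim = W_h.shape[0]
    h = np.zeros(hidden_dim) if h0 is None else h0
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_dim, hidden_dim = 1, 4
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

# A single impulse at t=1, followed by silence.
signal = np.array([[0.0], [1.0], [0.0], [0.0], [0.0]])
states = rnn_forward(signal, W_x, W_h, b)
```

The hidden state is zero before the impulse arrives and stays nonzero afterwards even though the later inputs are all zero: the recurrent term `W_h @ h` is what carries information from earlier time steps forward.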

Example: An RNN might be used in natural language processing to predict the next word in a sentence based on the context provided by previous words.

Difference between CNN and RNN

| Aspect | Convolutional Neural Networks (CNNs) | Recurrent Neural Networks (RNNs) |
|---|---|---|
| Primary Use | Primarily used for spatial data such as images. | Primarily used for sequential or time-series data. |
| Data Processing | Processes data through convolutional and pooling layers to extract spatial features. | Processes data sequentially, maintaining state information across time steps. |
| Architecture | Composed of convolutional layers, pooling layers, and fully connected layers. | Composed of recurrent layers with connections looping back to maintain state. |
| Memory | Does not inherently maintain memory of previous inputs; each image is processed independently. | Maintains memory of previous inputs through hidden states, capturing temporal dependencies. |
| Feature Extraction | Focuses on extracting spatial features like edges, shapes, and textures from data. | Focuses on capturing temporal patterns and dependencies across sequences. |
| Training Complexity | Typically requires large datasets and substantial computational resources to learn its feature extractors. | Often suffers from vanishing and exploding gradients, which gated architectures such as LSTMs or GRUs help mitigate. |
| Example Models | AlexNet, VGG, ResNet (for image classification). | LSTM, GRU, standard RNN (for language modeling and time-series analysis). |
| Applications | Used in tasks like image classification, object detection, and image segmentation. | Used in tasks like language modeling, speech recognition, and time-series forecasting. |
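The vanishing and exploding gradients mentioned in the Training Complexity row can be illustrated with a deliberately simplified case: a scalar, linear recurrence h_t = w * h_{t-1}. This is an assumption made for clarity (real RNNs use weight matrices and nonlinearities), and the helper name `gradient_through_time` is hypothetical. By the chain rule, the gradient of h_T with respect to h_0 is w multiplied by itself T times.

```python
def gradient_through_time(w, steps):
    """Gradient of h_T w.r.t. h_0 for the linear scalar recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: each step contributes a factor dh_t/dh_{t-1} = w
    return grad

vanishing = gradient_through_time(0.5, 50)  # |w| < 1: gradient shrinks toward zero
exploding = gradient_through_time(1.5, 50)  # |w| > 1: gradient grows without bound
```

Over 50 steps the first gradient falls below 1e-12 and the second exceeds 1e8, which is why signals from distant time steps are hard to learn with a plain RNN.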

Conclusion

Both CNNs and RNNs offer powerful tools for deep learning in DSP, each suited to different types of data and processing requirements. CNNs are particularly effective for spatial data and feature extraction tasks, while RNNs are better suited to sequential data and temporal analysis. Understanding their respective strengths and applications makes it easier to choose the right architecture for a specific DSP problem.
