10 Deep Learning Interview Questions and Answers

This document presents questions and answers on deep learning, covering key concepts such as CNNs and RNNs and their deployment on Digital Signal Processors (DSPs). It aims to provide a solid foundation for understanding and working with deep learning models in signal processing contexts. It can help candidates preparing for interviews for deep learning roles, as well as engineering students preparing for vivas.

Question 1: What is a Convolutional Neural Network (CNN), and how is it used in DSP applications?

Answer:

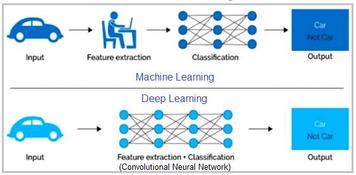

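A CNN (Convolutional Neural Network) is a type of deep learning model originally designed for processing and analyzing grid-structured data such as images. It uses convolutional layers to automatically and adaptively learn spatial hierarchies of features from its input. The same principle applies to one-dimensional signals such as audio or sensor data, where the convolution slides along the time axis.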

Usage in DSP:

In DSP applications, CNNs are used for image and video processing, object detection, and pattern recognition, and more generally for tasks such as:

- Noise reduction

- Feature extraction

- Classification of signals and images

Key Features:

- Convolutional Layers: Extract features by applying convolutional filters.

- Pooling Layers: Reduce the dimensionality of feature maps.

- Fully Connected Layers: Perform classification or regression based on extracted features.
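The three layer types above can be sketched for a one-dimensional signal in plain Python. This is a minimal illustration, not an optimized DSP kernel: the function names are hypothetical, the kernel is hand-picked rather than learned, and, as in most deep learning frameworks, the "convolution" is computed as a cross-correlation.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1D convolution (computed as cross-correlation, like most DL frameworks)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def apply_relu(values):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]


def max_pool1d(values, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


def dense(features, weights, bias=0.0):
    """A single fully connected output unit."""
    return sum(f * w for f, w in zip(features, weights)) + bias


# A short pulse; the kernel [-1, 1] responds to rising edges.
signal = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0]
feature_map = apply_relu(conv1d(signal, [-1.0, 1.0]))  # [0, 1, 2, 0, 0, 0, 0]
pooled = max_pool1d(feature_map)                      # [1, 2, 0]
score = dense(pooled, [0.5, 0.5, 0.5])                # 1.5
```

In a trained CNN the kernel values and dense weights would be learned from data, and each layer would have many kernels producing many feature maps.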

Question 2: What is the role of activation functions in CNNs, and can you name a few commonly used ones?

Answer:

Role of Activation Functions:

Activation functions introduce non-linearity into the CNN, allowing the network to model complex patterns and relationships in the data. Without activation functions, the CNN would essentially be a linear model, severely limiting its ability to learn complex features.
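A small numeric check of this claim: two linear layers applied in sequence, with no activation between them, give exactly the same result as one layer whose weight matrix is the product of the two. The matrices below are arbitrary illustrative values, and biases are omitted (with biases, the stack still collapses to a single affine layer).

```python
def matmul(a, b):
    """Matrix product of a (m x n) and b (n x p), both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m, v):
    """Matrix-vector product."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[3.0, 1.0], [-2.0, 0.5]]
x = [1.0, -1.0]

two_layers = matvec(W2, matvec(W1, x))  # layer 1 then layer 2, no activation
one_layer = matvec(matmul(W2, W1), x)   # single layer with weights W2 @ W1
# both equal [-2.0, 2.5]

# Inserting a ReLU between the layers breaks the equivalence.
hidden = [max(0.0, h) for h in matvec(W1, x)]
with_relu = matvec(W2, hidden)          # [1.0, 0.5]
```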

Common Activation Functions:

- ReLU (Rectified Linear Unit): Computes max(0, x). Commonly used for its simplicity and low computational cost. Because its gradient is 1 for positive inputs, ReLU helps mitigate the vanishing gradient problem.

- Sigmoid: Squashes its input to the range (0, 1), so it is typically used in the output layer of binary classifiers, where the output can be read as a probability.

- Tanh: Squashes its input to the range (-1, 1) with zero-centered outputs; often used in hidden layers and inside recurrent cells such as LSTMs.
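The three functions take only a few lines each. As a sketch using the standard definitions in plain Python, the helpers below also include the derivatives of ReLU and sigmoid: the sigmoid's gradient never exceeds 0.25 and approaches zero for large |x|, while ReLU's gradient is exactly 1 for positive inputs, which is the intuition behind ReLU easing the vanishing gradient problem.

```python
import math


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    # Branch on the sign so math.exp never overflows for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    return math.tanh(x)


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0  # subgradient 0 chosen at x == 0


def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x == 0
```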

Conclusion:

Activation functions are crucial for enabling CNNs to learn and model complex patterns in data.

Question 3: What is a Recurrent Neural Network (RNN), and how does it differ from a CNN?

Answer:

RNN (Recurrent Neural Network):

An RNN is a type of neural network designed for sequential data. It has connections that form directed cycles, allowing information to persist and be used across different time steps. This makes RNNs suitable for tasks where the order of data matters.

Difference from CNN:

- CNN: Primarily used for spatial data (e.g., images) and captures spatial hierarchies through convolutional layers.

- RNN: Used for temporal data (e.g., time series, text) and captures temporal dependencies through recurrent connections.

Key Feature of RNNs:

- Memory Mechanism: RNNs maintain a hidden state that is updated across time steps, allowing the network to remember past information. This is crucial for understanding context in sequential data.
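The hidden-state update can be sketched as a minimal Elman-style RNN with a scalar input and a scalar hidden state, h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). The weights are illustrative, not trained. Feeding an impulse followed by zeros shows the "memory": the hidden state stays non-zero after the input has gone, decaying over time.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a scalar RNN over a sequence and return the hidden state at every step."""
    h = h0
    states = []
    for x in inputs:
        # The same weights are reused at every time step; h carries the past forward.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


impulse_first = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
impulse_last = rnn_forward([0.0, 0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)
# Same input values in a different order give a different final state: order matters.
```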

Question 4: What are LSTMs (Long Short-Term Memory networks), and how do they address the limitations of traditional RNNs?

Answer:

LSTM (Long Short-Term Memory):

LSTMs are a type of RNN designed to handle long-term dependencies. They mainly mitigate the vanishing gradient problem that limits traditional RNNs; exploding gradients can still occur and are usually handled separately, for example with gradient clipping.

How They Address Limitations:

- Gates Mechanism: LSTMs use gates (input, forget, and output gates) to control the flow of information, allowing the network to retain relevant information over longer sequences.

- Cell State: Maintains a cell state that can carry information across long sequences, addressing the vanishing gradient problem. The cell state acts as a “memory” that can be updated or cleared as needed.
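A single LSTM time step can be sketched with scalar values to make the gate arithmetic visible. This is a minimal illustration with hand-picked (hypothetical) parameters rather than trained ones; real cells use weight matrices and vectors. Setting the forget gate close to 1 and the input gate close to 0 shows how the additive cell-state update preserves information across many steps.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell.

    params maps each block name to (input weight, recurrent weight, bias).
    """
    def pre_activation(name):
        w, u, b = params[name]
        return w * x + u * h_prev + b

    i = sigmoid(pre_activation("input"))        # how much new information to write
    f = sigmoid(pre_activation("forget"))       # how much of the old cell state to keep
    o = sigmoid(pre_activation("output"))       # how much of the cell state to expose
    g = math.tanh(pre_activation("candidate"))  # candidate values to write
    c = f * c_prev + i * g                      # additive cell-state update
    h = o * math.tanh(c)
    return h, c


# Hypothetical parameters: forget gate nearly open, input gate nearly closed.
params = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with information stored in the cell state
for _ in range(50):
    h, c = lstm_step(0.5, h, c, params)
# c is still close to 1.0 after 50 steps
```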

Conclusion:

LSTMs improve RNN performance on tasks involving long-term dependencies and sequential data, making them suitable for tasks like natural language processing and speech recognition.

Question 5: How are CNNs typically implemented on DSPs, and what are the key considerations?

Answer:

Implementation of CNNs on DSPs:

- Optimized Libraries: Use DSP-optimized libraries and frameworks (e.g., TensorFlow Lite for Microcontrollers) that provide pre-optimized operations for convolution, activation, and pooling layers.

- Efficient Computation: Leverage fixed-point arithmetic and SIMD (Single Instruction, Multiple Data) instructions to speed up convolution operations and reduce power consumption.

- Memory Management: Manage memory and data movement carefully to fit the constraints of DSPs, for example by quantizing weights and activations to reduce their footprint and by organizing computation so that working data stays in fast on-chip memory.
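As a sketch of the fixed-point arithmetic mentioned above, the code below uses Q15, a common 16-bit fixed-point format on DSPs (1 sign bit, 15 fractional bits). Products of two Q15 values are accumulated at double width (Q30), then rounded back to Q15 and saturated, which mirrors how multiply-accumulate loops are usually written for DSP cores. Python integers are unbounded, so the wide accumulator is only emulated here; the function names are illustrative, not a specific library's API.

```python
Q = 15
INT16_MIN, INT16_MAX = -32768, 32767


def saturate16(v: int) -> int:
    """Clamp to the int16 range instead of wrapping around on overflow."""
    return max(INT16_MIN, min(INT16_MAX, v))


def to_q15(x: float) -> int:
    """Convert a float in [-1, 1) to Q15 with rounding and saturation."""
    return saturate16(round(x * (1 << Q)))


def from_q15(v: int) -> float:
    return v / (1 << Q)


def dot_q15(a, b):
    """Q15 dot product with a wide accumulator, rounded back to Q15."""
    acc = 0
    for x, y in zip(a, b):
        acc += x * y                    # Q15 * Q15 -> Q30
    acc = (acc + (1 << (Q - 1))) >> Q   # round to nearest, Q30 -> Q15
    return saturate16(acc)


weights = [to_q15(w) for w in (0.5, -0.25, 0.125)]
inputs = [to_q15(0.5)] * 3
result = from_q15(dot_q15(weights, inputs))  # 0.1875, matching the float result
```

Saturating rather than wrapping matters: an overflowing sum clamps to the largest representable value instead of flipping sign.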

Key Considerations:

- Resource Constraints: DSPs have limited computational resources and memory, so optimization is crucial.

- Real-Time Processing: Ensure that the inference time per input frame fits within the application's real-time deadline.

Question 6: What are some common challenges when deploying deep learning models on DSPs?

Answer:

Common Challenges:

- Resource Limitations: DSPs have limited memory and processing power compared to general-purpose CPUs or GPUs.

- Optimization: Deep learning models, especially CNNs and RNNs, need to be optimized for efficient computation and memory usage on DSPs.

- Latency: Ensuring that models meet real-time processing requirements can be challenging due to computational complexity.

- Quantization: Converting floating-point models to fixed-point representations may affect accuracy and require careful tuning.
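The latency challenge can be estimated before any code runs on the target by counting multiply-accumulate (MAC) operations. A minimal sketch, assuming a standard (non-grouped) 2D convolution and a hypothetical DSP that sustains 8 MACs per cycle at 500 MHz; the estimate ignores memory stalls and other overhead, so real latency will be higher.

```python
def conv2d_macs(h_out, w_out, c_in, c_out, k):
    """MACs for a standard (non-grouped) k x k 2D convolution layer."""
    return h_out * w_out * c_out * (k * k * c_in)


def latency_ms(macs, macs_per_cycle, clock_hz):
    """Idealized compute time in milliseconds; ignores memory stalls and overhead."""
    return macs / (macs_per_cycle * clock_hz) * 1e3


# Example layer: 32x32 output, 16 -> 32 channels, 3x3 kernel.
macs = conv2d_macs(32, 32, 16, 32, 3)   # 4,718,592 MACs
# Hypothetical DSP: 8 MACs per cycle at 500 MHz.
t_ms = latency_ms(macs, 8, 500e6)       # about 1.18 ms
```

Summing such per-layer estimates over the whole network gives a quick first check of whether a real-time budget (for example, about 33 ms per frame at 30 fps) is plausible at all.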

Conclusion:

Addressing these challenges involves optimizing models, managing resources efficiently, and ensuring real-time performance.

Question 7: How does quantization affect deep learning models when deployed on DSPs?

Answer:

Quantization:

Quantization involves converting floating-point weights and activations to lower-bit representations (e.g., 8-bit integers) to reduce model size and computational requirements.

Impact on Models:

- Reduced Precision: Quantization can lead to a loss of precision, potentially affecting model accuracy.

- Increased Efficiency: Quantized models are more efficient in terms of memory usage and computational speed, which is beneficial for DSPs with limited resources.

- Trade-Offs: Balancing accuracy and efficiency requires careful tuning and evaluation of the quantized model.
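A minimal sketch of asymmetric (affine) 8-bit quantization, one common scheme: each real value x is mapped to an integer q = round(x / scale) + zero_point, clamped to [-128, 127], and dequantized back as (q - zero_point) * scale. The weight values are illustrative. Storing one byte per weight instead of four (float32) cuts memory by 4x, while the round-trip error for in-range values stays within about half a quantization step (scale / 2), which is the precision loss described above.

```python
def quant_params(x_min, x_max, qmin=-128, qmax=127):
    """Scale and zero point mapping [x_min, x_max] onto the int8 range."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # the range must include 0
    if x_max == x_min:
        return 1.0, 0  # all-zero tensor: any scale works
    scale = (x_max - x_min) / (qmax - qmin)
    zero_point = round(qmin - x_min / scale)
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    return (q - zero_point) * scale


weights = [-1.0, -0.5, 0.0, 0.3, 1.0]
scale, zp = quant_params(min(weights), max(weights))
q_weights = [quantize(w, scale, zp) for w in weights]
restored = [dequantize(q, scale, zp) for q in q_weights]
```

Keeping 0.0 exactly representable (via the zero point) matters in practice, because zero padding and ReLU outputs produce many exact zeros.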

Conclusion:

Quantization helps deploy deep learning models on DSPs by making them more efficient but requires careful consideration of accuracy trade-offs.

Question 8: What is transfer learning, and how can it be applied to deep learning on DSPs?

Answer:

Transfer Learning:

Transfer learning involves taking a pre-trained model (typically trained on a large dataset) and fine-tuning it on a smaller, domain-specific dataset.

Application to DSPs:

- Pre-Trained Models: Use pre-trained CNN or RNN models and adapt them for specific tasks or datasets relevant to the DSP application.

- Fine-Tuning: Adjust the pre-trained model's weights to fit the specific task or domain. Fine-tuning is usually done offline on a workstation or GPU; the resulting model is then optimized and deployed to the DSP for inference.
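A minimal sketch of one common form of transfer learning: the pre-trained feature extractor is frozen and only a new, small classification head is trained on the task-specific data. Here `FROZEN_FILTERS` is a hypothetical stand-in for pre-trained weights, the head is a logistic-regression unit trained with plain stochastic gradient descent, and the toy dataset is made up for illustration.

```python
import math

# Hypothetical stand-in for a pre-trained feature extractor. These weights are
# frozen: fine-tuning below never modifies them.
FROZEN_FILTERS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen layer: linear projection followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_FILTERS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(samples, labels, lr=0.5, epochs=200):
    """Train only a new logistic-regression head on top of the frozen features."""
    w, b = [0.0] * len(FROZEN_FILTERS), 0.0
    features = [extract_features(x) for x in samples]  # computed once: extractor is frozen
    for _ in range(epochs):
        for f, y in zip(features, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            err = sigmoid(z) - y  # gradient of binary cross-entropy w.r.t. z
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(x, w, b):
    z = sum(wi * fi for wi, fi in zip(w, extract_features(x))) + b
    return 1 if z > 0 else 0


# Toy task-specific dataset: class 1 when the first input exceeds the second.
samples = [[2.0, 0.0], [3.0, 1.0], [1.0, 0.0], [0.0, 2.0], [1.0, 3.0], [0.0, 1.0]]
labels = [1, 1, 1, 0, 0, 0]
w, b = fine_tune_head(samples, labels)
```

Because only the head's few parameters are trained, this needs far less data and compute than training the whole network from scratch.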

Conclusion:

Transfer learning reuses existing models, reducing training time and computational resources, and makes it practical to build task-specific models suitable for deployment on DSPs.

Question 9: How does model pruning help in deploying deep learning models on DSPs?

Answer:

Model Pruning:

Model pruning involves removing redundant or less important weights and neurons from a deep learning model to reduce its size and complexity.

Benefits for DSPs:

- Reduced Model Size: Smaller models fit better within the memory constraints of DSPs.

- Faster Inference: Fewer computations are required, leading to faster execution and reduced power consumption.

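- Simplified Computation: Structured pruning, which removes entire filters or channels, yields smaller dense layers that map directly onto optimized DSP kernels. Unstructured pruning, which zeroes individual weights, reduces storage but speeds up inference only if the implementation exploits the sparsity.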
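Both flavors of pruning can be sketched in a few lines. `magnitude_prune` performs unstructured pruning (zeroing the individually smallest weights), while `prune_channels` performs structured pruning by keeping the filters with the largest L1 norm. Magnitude and L1-norm criteria are common heuristics, not the only options, and the weight values are illustrative.

```python
def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero the `sparsity` fraction of weights with smallest |w|."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def prune_channels(filters, keep):
    """Structured pruning: keep the `keep` filters with the largest L1 norm."""
    ranked = sorted(range(len(filters)),
                    key=lambda i: sum(abs(w) for w in filters[i]), reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original channel order
    return [filters[i] for i in kept], kept


weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2, -0.02, 0.5]
sparse = magnitude_prune(weights, 0.5)
# [0.9, 0.0, 0.3, 0.0, -0.7, 0.0, 0.0, 0.5]

filters = [[0.1, -0.1], [1.0, 0.5], [0.0, 0.05], [-0.8, 0.2]]
smaller, kept = prune_channels(filters, keep=2)
# kept == [1, 3]; the layer now has 2 output channels instead of 4
```

After structured pruning, the next layer's input weights for the removed channels must be dropped as well; `kept` records which channel indices survive.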

Conclusion:

Pruning helps deploy more efficient models on DSPs by reducing size and computational demands while largely preserving accuracy, often with a short fine-tuning pass after pruning.

Question 10: What are some strategies to optimize RNNs for DSPs, given their sequential nature?

Answer:

Strategies to Optimize RNNs for DSPs:

- Quantization: Convert RNN weights and activations to lower-bit precision to reduce memory usage and computational demands.

- Simplified Architectures: Use simpler recurrent variants such as GRUs (Gated Recurrent Units), which have three gated blocks instead of the LSTM's four and therefore need fewer parameters and operations per time step.

- Efficient Computation: Implement optimized operations for recurrent computations, leveraging fixed-point arithmetic and parallel processing capabilities of DSPs.

- Model Compression: Apply techniques like pruning and weight sharing to reduce model size and complexity.
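The parameter savings of a GRU can be checked with simple arithmetic. A sketch, assuming one input weight matrix, one recurrent weight matrix and one bias vector per gated block (some frameworks store two bias vectors per block, so their exact counts differ slightly); the layer sizes are illustrative.

```python
def rnn_block_params(input_size, hidden_size):
    """Parameters of one gated block: W (hidden x input), U (hidden x hidden), bias."""
    return hidden_size * input_size + hidden_size * hidden_size + hidden_size


def lstm_params(input_size, hidden_size):
    return 4 * rnn_block_params(input_size, hidden_size)  # input, forget, output gates + candidate


def gru_params(input_size, hidden_size):
    return 3 * rnn_block_params(input_size, hidden_size)  # update, reset gates + candidate


# e.g. 40 input features per frame, 64 hidden units
lstm = lstm_params(40, 64)  # 26880
gru = gru_params(40, 64)    # 20160
```

Since each weight is used in one multiply-accumulate per time step, the per-step compute shrinks by roughly the same factor of 3/4.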

Conclusion:

Optimizing RNNs for DSPs involves reducing precision, simplifying architectures, and leveraging efficient computation techniques to fit within resource constraints while maintaining performance.
